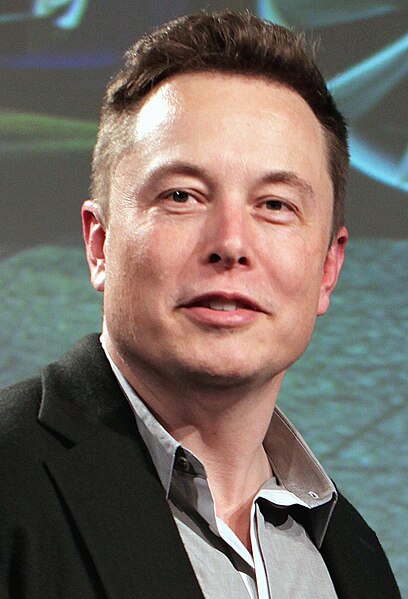

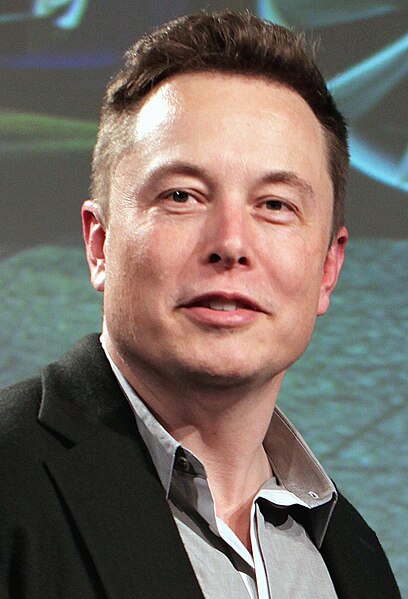

Elon Musk Claims We Only Have a 10 Percent Chance of Making AI Safe

Elon Musk has put a lot of thought into the harsh realities and wild possibilities of artificial intelligence (AI). These considerations have left him convinced that we need to merge with machines if we're to survive, and he has even created a startup dedicated to developing the brain-computer interface (BCI) technology needed to make that happen. But despite the fact that his own lab, OpenAI, has created an AI capable of teaching itself, Musk recently said that efforts to make AI safe only have "a five to 10 percent chance of success."

Musk shared these less-than-stellar odds with the staff at Neuralink, the aforementioned BCI startup, according to a recent Rolling Stone article. Despite his heavy involvement in the advancement of AI, Musk has openly acknowledged that the technology brings with it not only the potential for serious problems, but the promise of them.

The challenges to making AI safe are twofold.

First, a major goal of AI, and one that OpenAI is already pursuing, is building AI that is not only smarter than humans but also capable of learning independently, without any human programming or interference. Where that ability could take it is unknown.

Then there is the fact that machines do not have morals, remorse, or emotions. Future AI might be capable of distinguishing between "good" and "bad" actions, but distinctly human feelings remain just that: human.

In the Rolling Stone article, Musk further elaborated on the dangers and problems that currently exist with AI, one of which is the potential for just a few companies to essentially control the AI sector. He cited Google's DeepMind as a prime example.

"Between Facebook, Google, and Amazon (and arguably Apple, but they seem to care about privacy) they have more information about you than you can remember," said Musk. "There's a lot of risk in concentration of power. So if AGI [artificial general intelligence] represents an extreme level of power, should that be controlled by a few people at Google with no oversight?"

Worth the Risk?

Experts are divided on Musk's assertion that we probably can't make AI safe. Facebook founder Mark Zuckerberg has said he's optimistic about humanity's future with AI, calling Musk's warnings "pretty irresponsible." Meanwhile, Stephen Hawking has made public statements wholeheartedly expressing his belief that AI systems pose enough of a risk to humanity that they may replace us altogether.

Sergey Nikolenko, a Russian computer scientist who specializes in machine learning and network algorithms, recently shared his thoughts on the matter with Futurism. "I feel that we are still lacking the necessary basic understanding and methodology to achieve serious results on strong AI, the AI alignment problem, and other related problems," said Nikolenko.

As for today's AI, he thinks we have nothing to worry about. "I can bet any money that modern neural networks will not suddenly wake up and decide to overthrow their human overlord," said Nikolenko.

Musk himself might agree with that, but his sentiments are likely more focused on how future AI may build on what we have today.

Already, we have AI systems capable of creating AI systems, ones that can communicate in their own languages, and ones that are naturally curious. While the singularity and a robot uprising are strictly science fiction tropes today, such AI progress makes them seem like genuine possibilities for the world of tomorrow.

But these fears aren't necessarily enough reason to stop moving forward. We also have AIs that can diagnose cancer, identify suicidal behavior, and help stop sex trafficking.

The technology has the potential to save and improve lives globally, so while we must consider ways to make AI safe through future regulation, Musk's words of warning are, ultimately, just one man's opinion.

He even said as much himself to Rolling Stone: "I don't have all the answers. Let me be really clear about that. I'm trying to figure out the set of actions I can take that are more likely to result in a good future. If you have suggestions in that regard, please tell me what they are."
